
Without Data Governance, "AI Analytics" is Meaningless

New tools are constantly lowering the technical bar for conducting data analysis, but thoughtfulness and intention are still required to get value out of that analysis.

2025-05-29

Introduction

AI hype - or, to be more specific, LLM hype - is starting to bleed into conversations about data analytics in earnest. “Imagine a world where non-technical users can get answers right away from unstructured data, without cumbersome dashboards or expensive data infrastructure” would be a fair summary of these visions (at the risk of constructing a straw man).

I too would like to see the bar lowered for access to data, but I still feel like something is missing here. Maybe I’m just worried about being out of a job - after all, I do charge a generous hourly rate to put together boilerplate data pipelines and data models for analytics. But, I think it’s more than that: unleashing the full power of LLMs on a company’s data (regardless of how dubious that power is) might be an unblocker in some ways, but it also risks exacerbating a lot of the chaos that already exists for analytics teams without good data governance. I believe that this is a problem that AI and LLMs fundamentally can’t solve; so, as accessing and aggregating data becomes trivial, governance will become even more important.

Why is Data Governance Still Important?

When it comes to the limitations of AI, author Ted Chiang put it best when he said:

“Language is, by definition, a system of communication, and it requires an intention to communicate.”

The end goal of data analytics is communication, and it too requires a high degree of intention that can’t be provided by an LLM. Consider questions like: What does it mean for our business to be successful? What are we focusing on this quarter? The answers provide the intent that contextualizes and drives the additional questions that we attempt to answer with analysis.

Now, you can certainly ask ChatGPT or Gemini what they think about your company’s goals, and they might give you some decent answers, but they can’t force you to actually commit to and align on those answers. This is where data governance comes into play: if the intention behind your company’s goals and perspective is embedded in the metrics and dimensions that you use to analyze data, then analysis and reporting stay consistent with those goals. The goals need not be perfect; it’s the consistency that’s most important.

The issue with fully LLMifying data analysis is not that you’ll get wrong answers (though, I expect there will be plenty of those). The issue is, again, consistency. If someone is aggregating insights from raw data, the answer that they’ll receive will vary subtly based on how the question was asked. Imagine that you have two different teams with different perspectives who need to align on a shared goal: they could easily come to different conclusions from the same raw data.
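To make the consistency problem concrete, here is a minimal sketch in Python. The lead records and both definitions of "conversion rate" are made up for illustration; the point is only that two reasonable readings of the same question give different numbers from the same raw data.

```python
# Made-up lead records, purely for illustration.
leads = [
    {"id": 1, "started_trial": True,  "paid": True},
    {"id": 2, "started_trial": True,  "paid": True},
    {"id": 3, "started_trial": True,  "paid": False},
    {"id": 4, "started_trial": True,  "paid": False},
    {"id": 5, "started_trial": False, "paid": False},
]


def conversion_all_leads(rows):
    """One team's reading: paying customers divided by every lead."""
    return sum(r["paid"] for r in rows) / len(rows)


def conversion_trial_only(rows):
    """Another team's reading: paying customers divided by leads who started a trial."""
    trials = [r for r in rows if r["started_trial"]]
    return sum(r["paid"] for r in trials) / len(trials)


print(conversion_all_leads(leads))   # 0.4
print(conversion_trial_only(leads))  # 0.5
```

Neither number is wrong. They simply answer different questions, and nothing in the raw data says which question the company has agreed to ask.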

Story Time: The Unintelligible Metrics Review

At a previous full-time job, I attended a recurring cross-functional check-in with representatives from the sales, marketing, product, and other teams. Every week the sales team would deliver their update by rattling off a list of metrics, most of them unfamiliar three-letter initialisms. My eyes would glaze over; there were no follow-up questions or requests for clarification. I would find myself wondering: can anyone tell me if the sales motion is going well?! Who’s holding the team accountable? Why are we even bothering to have a meeting?

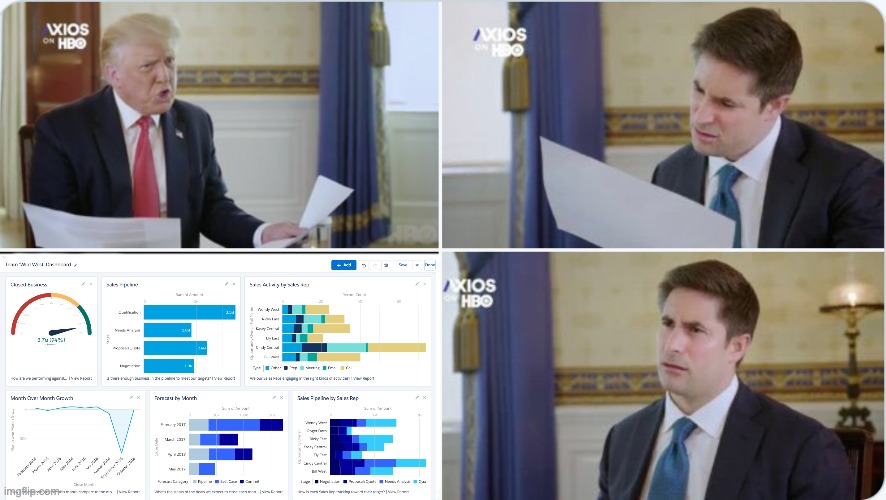

When I look at a "sales dashboard" that somebody else made

This is exactly how I see the future of “democratized” data analysis: a whole lot of nonsense that might technically be derived from valid data and might make sense to the people who compiled it, but serves no useful purpose in a cross-functional context.

Unfortunately, a lot of the people compiling this data might not care; they’re more concerned, like the offenders in my story, about covering their asses and appearing sufficiently “data driven” for the “data hungry CEO” (read: likes to squint at tables).

What Exactly do I Mean by Data Governance?

Data governance, for me, is relatively simple: start with your key metrics, codify them, and work backwards from there. But, there are still some finer points to consider:

- Define your metrics thoughtfully and collaboratively: give space to consider the company’s objectives at the highest level and constantly re-evaluate. For each individual team, solicit contributions and feedback from other teams so that everyone understands everyone else’s goals and how they fit into the big picture.

- Build your data models and dashboards with a focus on these metrics: keep data infrastructure as lean as possible by building the bare minimum to express these key metrics and any dimensions or auxiliary metrics that might provide insight. I typically will start by determining how many reporting tables I need, and their respective grains, before working backwards into any intermediate models that are necessary.
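As a rough sketch of what "codifying" a metric could look like, the Python below keeps each metric's definition, owner, and grain in one place and refuses to compute anything that hasn't been registered. Every name here (`Metric`, `REGISTRY`, `register`, `evaluate`, `lead_conversion_rate`) is hypothetical and chosen for illustration; this is not how Zetta or any particular tool implements it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Metric:
    """A documented metric definition (hypothetical structure)."""
    name: str
    description: str   # what the metric means, in plain language
    owner: str         # team accountable for the definition
    grain: str         # grain of the reporting table it reads from
    compute: Callable[[list[dict]], float]


# Single shared place where agreed-upon metrics live.
REGISTRY: dict[str, Metric] = {}


def register(metric: Metric) -> Metric:
    """Add a metric; reject a second definition under the same name."""
    if metric.name in REGISTRY:
        raise ValueError(f"metric {metric.name!r} is already defined")
    REGISTRY[metric.name] = metric
    return metric


def evaluate(name: str, rows: list[dict]) -> float:
    """Compute a metric only if it has been registered."""
    if name not in REGISTRY:
        raise KeyError(f"{name!r} is not a governed metric")
    return REGISTRY[name].compute(rows)


register(Metric(
    name="lead_conversion_rate",
    description="Paying customers divided by all leads in the period.",
    owner="sales",
    grain="one row per lead",
    compute=lambda rows: sum(r["paid"] for r in rows) / len(rows),
))
```

With this in place, `evaluate("lead_conversion_rate", rows)` always uses the one agreed definition, while a request for an unregistered name fails loudly instead of quietly producing a new, undocumented number.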

This philosophy is at the core of Zetta: every chart and table that you see in a Zetta dashboard is derived from a metric that has been previously articulated and documented. This also encourages analysts and data engineers to create clean data models that they can use to pull these metrics. To the extent that someone “goes rogue” and compiles their own data, this won’t be in Zetta.

While the Zetta platform itself does a good job enforcing patterns around governance, data practitioners still need to embrace a bit of a paradigm shift when it comes to data workflows. If LLMs and other AI tools trivialize the ability to retrieve and compile data, what do data analysts do, anyway? To me, it’s clear: they do what they should have been doing this whole time! Meaning: creating persistent data assets and tools that surface metrics that reflect true intent.

It might not be art, but gods willing it should at least involve some critical thinking.